Comparison of the accuracy of VGG16 and ResNet50 for image classification


Introduction

Several convolutional neural network (CNN) architectures have been developed for image classification tasks. One of the earliest, LeNet-5 (1998), was trained on the MNIST dataset for handwritten digit recognition. Over time, other models such as AlexNet (2012), VGG (2014), and ResNet (2015) were developed and trained on the ImageNet dataset. These models use different filter sizes and numbers of convolutional layers, different activation functions, and different fully connected (dense) layers. The aim of this project is to compare the accuracy of two commonly used architectures, VGG16 and ResNet50, in classifying images using their weights pretrained on the ImageNet dataset.

Getting started: importing the VGG16 model

Using TensorFlow 2 with Keras, we import the VGG16 model with the code below:

```python
from tensorflow.keras.applications.vgg16 import VGG16  # imports VGG16
from tensorflow.keras.preprocessing import image  # image loading and conversion
from tensorflow.keras.applications.vgg16 import preprocess_input, decode_predictions  # VGG16 preprocessing and label decoding
import numpy as np

model = VGG16(weights='imagenet')  # loads the ImageNet-trained weights
model.summary()  # summarizes the layers and parameters of the model
```

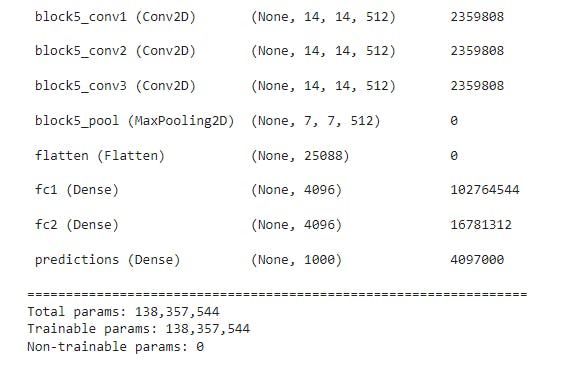

As the summary above shows, VGG16 has 13 convolutional layers, 3 dense layers, and 138,357,544 parameters.
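The parameter count can be checked by hand: each 3x3 convolution has 3·3·C_in·C_out weights plus C_out biases, and each dense layer has n_in·n_out weights plus n_out biases. A minimal sketch, assuming the standard VGG16 configuration (five blocks of 3x3 convolutions separated by 2x2 max-pooling, followed by dense layers of 4096, 4096, and 1000 units); `vgg16_param_count` is a hypothetical helper, not a Keras function:

```python
# VGG16 convolutional configuration: numbers are the output channels of
# 3x3 conv layers, "M" marks a 2x2 max-pooling layer (no parameters).
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_count(num_classes=1000, image_size=224):
    """Return (conv layers, dense layers, total parameters) for VGG16."""
    params, in_ch, size, conv_layers = 0, 3, image_size, 0
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2  # each pooling layer halves height and width
            continue
        params += 3 * 3 * in_ch * layer + layer  # weights + biases
        in_ch = layer
        conv_layers += 1

    # Flattened 7 x 7 x 512 feature map feeds the three dense layers
    dense_sizes = [size * size * in_ch, 4096, 4096, num_classes]
    dense_layers = 0
    for n_in, n_out in zip(dense_sizes, dense_sizes[1:]):
        params += n_in * n_out + n_out  # weights + biases
        dense_layers += 1
    return conv_layers, dense_layers, params


print(vgg16_param_count())  # (13, 3, 138357544)
```

The dense layers dominate the total: the first one alone (25,088 x 4,096 weights plus biases) holds 102,764,544 of the 138,357,544 parameters.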

Download and load test images

We will download a zipped file that contains the test images (inputs). The images are then extracted and preprocessed before being fed into the model.

```
!wget https://moderncomputervision.s3.eu-west-2.amazonaws.com/imagesDLCV.zip # downloads the file
!unzip imagesDLCV.zip # unzips the file
```
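The `!wget` and `!unzip` lines are notebook shell commands (for example in Jupyter or Colab). Outside a notebook, the same two steps can be done with Python's standard library. A minimal sketch, where `download` and `extract` are hypothetical helper names:

```python
import urllib.request
import zipfile


def download(url, dest_path):
    """Download `url` to `dest_path` (the equivalent of !wget)."""
    urllib.request.urlretrieve(url, dest_path)
    return dest_path


def extract(zip_path, dest_dir="."):
    """Extract every member of `zip_path` into `dest_dir` (the equivalent of !unzip).

    Returns the list of archive member names.
    """
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
        return zf.namelist()


# Usage outside a notebook:
# download("https://moderncomputervision.s3.eu-west-2.amazonaws.com/imagesDLCV.zip", "imagesDLCV.zip")
# extract("imagesDLCV.zip")
```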

To extract the images from the file:

import cv2

from os import listdir

from os.path import isfile, join

#Get images located in ./images folder

mypath = "./images/class1/" #path of the folder containing the images

file_names = [f for f in listdir(mypath) if isfile(join(mypath, f))]

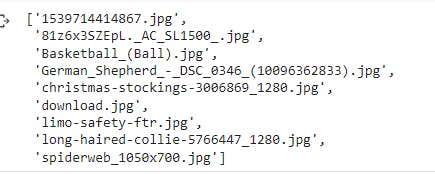

print (file_names)
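`os.listdir` returns entries in arbitrary order, and `file_names` also picks up any non-image file in the folder. Since the ground-truth list later in this post must follow the same order as `file_names`, a sorted, filtered listing makes the run reproducible. A minimal sketch; `list_images` and the set of extensions are assumptions, and if you use it, write `ground_truth` in the sorted order:

```python
from os import listdir
from os.path import isfile, join, splitext

# Assumed set of image file types to keep
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_images(folder):
    """Return the image file names in `folder`, sorted so the order is reproducible."""
    return sorted(
        f for f in listdir(folder)
        if isfile(join(folder, f)) and splitext(f)[1].lower() in IMAGE_EXTENSIONS
    )


# Usage: file_names = list_images("./images/class1/")
```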

Feeding the inputs into the model

```python
import matplotlib.pyplot as plt

fig = plt.figure(figsize=(16, 16))
all_top_classes = []  # saves the top-5 predicted labels of each image

# Loop through the images
for (i, file) in enumerate(file_names):
    # Image preprocessing
    img = image.load_img(mypath + file, target_size=(224, 224))  # VGG16 with its dense layers requires 224x224 inputs
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)  # add a batch dimension: (1, 224, 224, 3)
    x = preprocess_input(x)

    # Load the original image with OpenCV for display
    img2 = cv2.imread(mypath + file)

    # Get predictions
    preds = model.predict(x)
    predictions = decode_predictions(preds, top=5)[0]
    all_top_classes.append([p[1] for p in predictions])  # keep only the class names

    # Plot image
    sub = fig.add_subplot(len(file_names), 1, i + 1)
    sub.set_title(f'Predicted {str(predictions)}')
    plt.axis('off')
    plt.imshow(cv2.cvtColor(img2, cv2.COLOR_BGR2RGB))  # OpenCV loads BGR; matplotlib expects RGB

plt.show()  # outputs the images with their predicted labels
```

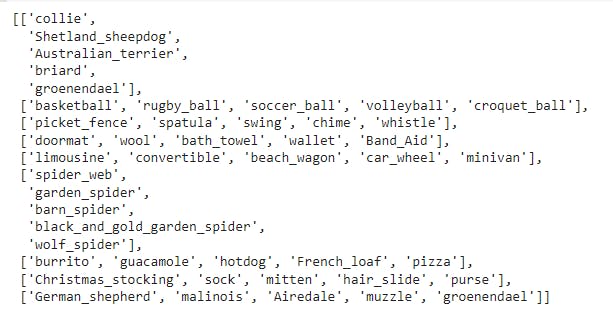

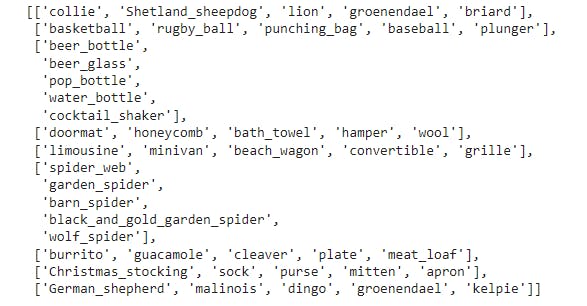
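Before prediction, `preprocess_input` transforms the pixel values. For VGG16 (and for ResNet50 below), Keras uses what it calls "caffe" mode: the image is converted from RGB to BGR and the ImageNet mean of each channel is subtracted, without any scaling. A minimal NumPy sketch of that step, for illustration only; `caffe_style_preprocess` is a hypothetical name, and in practice the model's own `preprocess_input` should be used:

```python
import numpy as np

# ImageNet channel means in BGR order, as used by caffe-mode preprocessing
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def caffe_style_preprocess(batch_rgb):
    """Mimic caffe-mode preprocessing on an (N, H, W, 3) RGB batch in [0, 255]."""
    x = np.asarray(batch_rgb, dtype=np.float32)
    x = x[..., ::-1]              # reverse the channel axis: RGB -> BGR
    return x - IMAGENET_BGR_MEAN  # zero-center each channel; returns a new array


# Usage: a single pure-red 224x224 image
batch = np.zeros((1, 224, 224, 3), dtype=np.float32)
batch[..., 0] = 255.0
print(caffe_style_preprocess(batch)[0, 0, 0])
```

Because the input is zero-centered rather than scaled to [0, 1], feeding raw or differently normalized pixels to the pretrained weights would degrade the predictions.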

We can view the top-5 predicted labels for each image by running `print(all_top_classes)`.
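Each entry returned by `decode_predictions` is a `(WordNet ID, class name, score)` tuple, sorted from highest to lowest score, which is why the loop keeps element `[1]`. The ranking itself amounts to sorting the model's 1000 class scores. A minimal NumPy sketch of that idea, using a hypothetical five-class label list in place of the ImageNet classes; `top_k` is not a Keras function:

```python
import numpy as np


def top_k(scores, class_names, k=5):
    """Return (class_name, score) pairs for the k highest scores, best first."""
    scores = np.asarray(scores)
    best = np.argsort(scores)[::-1][:k]  # indices sorted by descending score
    return [(class_names[i], float(scores[i])) for i in best]


# Hypothetical scores over a hypothetical five-class label set
names = ["collie", "basketball", "burrito", "doormat", "limousine"]
scores = [0.05, 0.60, 0.10, 0.20, 0.05]
print(top_k(scores, names, k=3))
# [('basketball', 0.6), ('doormat', 0.2), ('burrito', 0.1)]
```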

Creating the ground-truth labels

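We will create a list that contains the true label of each image. The labels must be in the same order as `file_names`, since that is the order in which the images were fed to the model. Because `os.listdir` returns file names in arbitrary order, check the output of `print(file_names)` before writing this list.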

```python
ground_truth = ['collie', 'basketball', 'beer_glass', 'doormat', 'limousine',
                'spider_web', 'burrito', 'Christmas_stocking', 'German_shepherd']
```

Creating a function that outputs the accuracy of the model

Since `all_top_classes` holds the top-5 predicted labels for each image, we compare these labels with the ground-truth labels. In other words, we check whether each image's ground-truth label falls within its top-5 predicted labels.
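Written as a formula, with $M$ test images, ground-truth label $y_i$ for image $i$, and $T_i^{(N)}$ the set of its first $N$ predicted labels, the rank-$N$ accuracy is the percentage of images whose true label is in that set:

$$
\text{Rank-}N\ \text{accuracy} = \frac{100\%}{M} \sum_{i=1}^{M} \mathbb{1}\left[\, y_i \in T_i^{(N)} \,\right]
$$

Here $\mathbb{1}[\cdot]$ equals 1 when the condition holds and 0 otherwise, so with $N = 5$ this is the rank-5 (top-5) accuracy.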

```python
def getScore(all_top_classes, ground_truth, N):
    # Calculate the rank-N score: the share of images whose ground-truth
    # label is among their first N predicted labels
    in_labels = 0
    for (i, labels) in enumerate(all_top_classes):
        if ground_truth[i] in labels[:N]:
            in_labels += 1
    return f'Rank-{N} Accuracy = {in_labels/len(all_top_classes)*100:.2f}%'
```

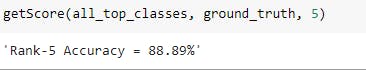

```python
print(getScore(all_top_classes, ground_truth, 5))
```
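To see how N changes the score, here is the same rank-N logic applied to made-up predictions for three images (hypothetical labels, not output from either model). `rank_n_accuracy` is a hypothetical variant of `getScore` that returns the number instead of a formatted string:

```python
def rank_n_accuracy(all_top_classes, ground_truth, n):
    """Percentage of images whose true label is among their first n predictions."""
    hits = sum(truth in labels[:n]
               for labels, truth in zip(all_top_classes, ground_truth))
    return hits / len(all_top_classes) * 100


# Hypothetical top-3 predictions for three images (not real model output)
toy_predictions = [
    ["collie", "Shetland_sheepdog", "borzoi"],  # correct at rank 1
    ["doormat", "prayer_rug", "burrito"],       # correct at rank 3
    ["limousine", "minivan", "cab"],            # true label missing
]
toy_truth = ["collie", "burrito", "beer_glass"]

for n in (1, 3):
    print(f"Rank-{n} Accuracy = {rank_n_accuracy(toy_predictions, toy_truth, n):.2f}%")
# Rank-1 Accuracy = 33.33%
# Rank-3 Accuracy = 66.67%
```

A larger N can only keep or raise the score, since every label in the first N predictions is also in the first N+1.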

Importing ResNet50 for image classification

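We load ResNet50 in a similar way. Importing `preprocess_input` and `decode_predictions` from the `resnet50` module replaces the VGG16 versions imported earlier, so the loop below uses ResNet50's own preprocessing and decoding.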

from tensorflow.keras.applications.resnet50 import ResNet50

from tensorflow.keras.preprocessing import image

from tensorflow.keras.applications.resnet50 import preprocess_input, decode_predictions

import numpy as np

model = ResNet50(weights='imagenet')

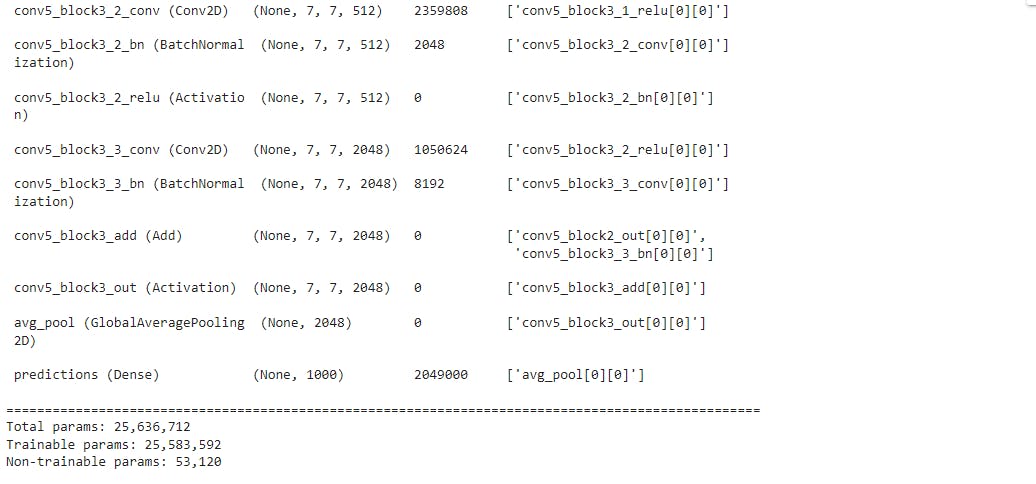

model.summary()

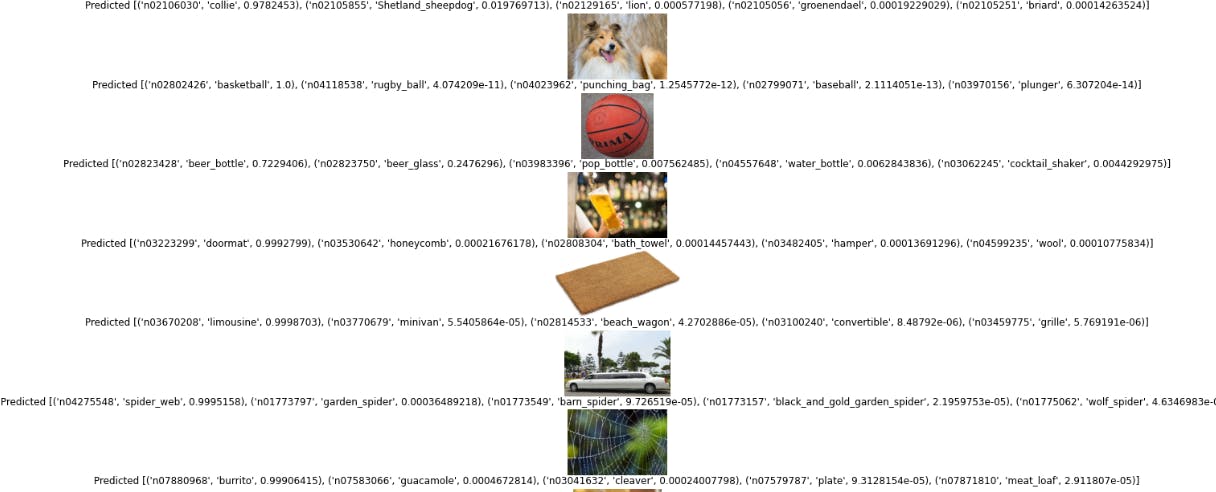

Feeding the inputs into the model

```python
import matplotlib.pyplot as plt

fig = plt.figure(figsize=(16, 16))
all_top_classes = []  # saves the top-5 predicted labels of each image

# Loop through the images and run them through our classifier
for (i, file) in enumerate(file_names):
    img = image.load_img(mypath + file, target_size=(224, 224))  # ResNet50 with its dense layer also requires 224x224 inputs
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)  # add a batch dimension: (1, 224, 224, 3)
    x = preprocess_input(x)

    # Load the original image with OpenCV for display
    img2 = cv2.imread(mypath + file)

    # Get predictions
    preds = model.predict(x)
    predictions = decode_predictions(preds, top=5)[0]
    all_top_classes.append([p[1] for p in predictions])  # keep only the class names

    # Plot image
    sub = fig.add_subplot(len(file_names), 1, i + 1)
    sub.set_title(f'Predicted {str(predictions)}')
    plt.axis('off')
    plt.imshow(cv2.cvtColor(img2, cv2.COLOR_BGR2RGB))  # OpenCV loads BGR; matplotlib expects RGB

plt.show()
```

Getting the top-5 predicted labels for each image:

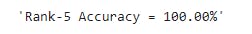

Calculating the accuracy of the model, reusing the `getScore` function defined in the VGG16 section:

```python
print(getScore(all_top_classes, ground_truth, 5))
```
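The ResNet50 loop repeats the VGG16 loop almost line for line; only the model and its `preprocess_input` and `decode_predictions` functions change. One way to avoid the duplication is a helper that takes those pieces as arguments. A minimal sketch; `top_k_labels` is a hypothetical name, and the image loader is passed in so the function itself does not depend on TensorFlow:

```python
import numpy as np


def top_k_labels(paths, load_array, preprocess_fn, predict_fn, decode_fn, k=5):
    """Return the top-k predicted class names for each image path.

    load_array:    callable mapping a path to an (H, W, 3) float array
    preprocess_fn: the model-specific preprocess_input
    predict_fn:    callable mapping a (1, H, W, 3) batch to class scores
    decode_fn:     the model-specific decode_predictions
    """
    results = []
    for path in paths:
        x = np.expand_dims(load_array(path), axis=0)  # add a batch dimension
        x = preprocess_fn(x)
        preds = predict_fn(x)
        decoded = decode_fn(preds, top=k)[0]  # list of (class_id, name, score)
        results.append([name for (_, name, _) in decoded])
    return results


# Example usage with ResNet50 (assumes TensorFlow and the objects defined above):
# from tensorflow.keras.applications import resnet50
# load = lambda p: image.img_to_array(image.load_img(p, target_size=(224, 224)))
# all_top_classes = top_k_labels([mypath + f for f in file_names], load,
#                                resnet50.preprocess_input, model.predict,
#                                resnet50.decode_predictions)
```

The returned list has the same shape as `all_top_classes`, so it can be passed to `getScore` unchanged.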

Conclusion

The two models (VGG16 and ResNet50) produced different accuracy results. On the nine test images, the rank-5 accuracy of VGG16 was 88.89% (8 of 9 images), while the rank-5 accuracy of ResNet50 was 100% (9 of 9). Thus, ResNet50 was better suited for this image classification task on this small test set. The two models also differ in size: VGG16 has 138,357,544 parameters and ResNet50 has 25,636,712, roughly 5.4 times fewer. Over the years, developers have made different design and training choices (activation function, learning rate, loss function) when building models for image classification. Hence, it is worth evaluating several models on an image classification task to obtain the best result.